A Comprehensive Overview of the Grand Unified Theory in Physics

By Lawrence M. Krauss

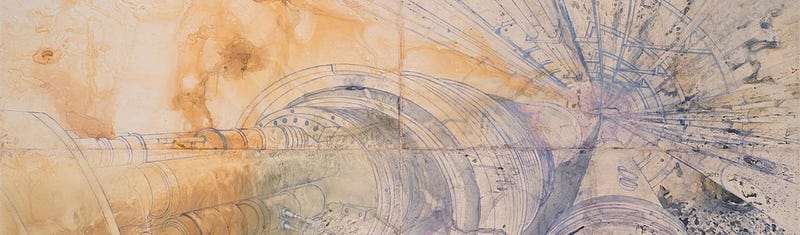

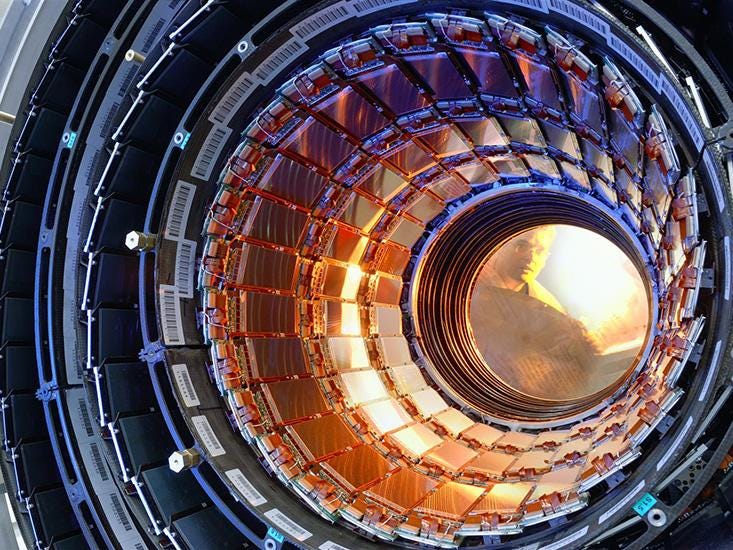

Particle physicists faced two significant fears before the Higgs boson was found in 2012. The first concern was that the Large Hadron Collider (LHC) might yield no results at all, potentially marking the end of large-scale particle accelerators designed to explore the universe's fundamental structure. The second worry was that the LHC would only confirm the Higgs boson, as theorized by Peter Higgs in 1964, without revealing anything further.

Each new layer of reality we uncover tends to raise further questions. Major scientific breakthroughs typically generate new questions alongside answers, yet they usually also provide a framework to guide our search for solutions. The discovery of the Higgs boson, and with it the confirmation of a Higgs field pervading all of space, validated many bold scientific advances of the 20th century.

However, Sheldon Glashow's observation remains pertinent: the Higgs acts like a toilet, concealing messy details we prefer to overlook. The Higgs field interacts with most elementary particles in transit, imparting a resistive force that slows them down and gives them mass. Consequently, the masses of elementary particles we measure are somewhat illusory—merely a product of our unique perspective.

Though this idea is aesthetically pleasing, it serves as a makeshift addition to the Standard Model of physics, which explains three of the four known natural forces and their interactions with matter. The Higgs mechanism was integrated into the model to accurately reflect our experiences, but it isn't strictly necessary for the theory's validity. A universe could theoretically exist with massless particles and a long-range weak force, though we wouldn’t be here to ponder it. Furthermore, the specifics of the Higgs particle remain undefined within the Standard Model—it could have been significantly heavier or lighter.

This raises critical questions: Why does the Higgs exist, and how did it acquire its mass? When scientists ask "Why?", they often mean "How?". The Higgs is essential for the existence of the observable universe, but that alone does not provide a satisfactory explanation. To truly comprehend the physics underlying the Higgs is to grasp our existence. Thus, asking "Why are we here?" could be reframed as "Why does the Higgs exist?" Yet the Standard Model offers no answers.

Nevertheless, clues exist, emerging from theoretical and experimental insights. Shortly after the Standard Model's core framework was established in 1974, physicists at Harvard, including Glashow and Steven Weinberg, made intriguing observations. Glashow and Howard Georgi excelled at identifying patterns among existing particles and forces, utilizing group theory mathematics.

In the Standard Model, the weak and electromagnetic forces merge at high-energy levels into what is termed the "electroweak force." This signifies that the governing mathematics for these forces are intertwined, constrained by a common symmetry, indicating they are merely different facets of a single foundational theory. However, this symmetry is "spontaneously broken" by the Higgs field, which interacts with weak force carriers but not with those of electromagnetism, leading to the perception of two distinct forces at measurable scales—one short-range and the other long-range.

Georgi and Glashow aimed to extend this concept to encompass the strong force, discovering that all known particles and the three non-gravitational forces could fit within a single fundamental symmetry structure. They speculated that this symmetry might spontaneously break at an ultra-high energy scale beyond current experimental reach, leaving behind two unbroken symmetries that would correspond to separate strong and electroweak forces. Subsequently, at lower energy and larger distances, electroweak symmetry would fracture, creating distinct weak and electromagnetic forces.

They referred to this overarching theory as a Grand Unified Theory (GUT).

Around the same time, Weinberg and Georgi, along with Helen Quinn, observed that while the strong force weakens at smaller distances, the electromagnetic and weak forces intensify. With further calculations, they proposed that the strengths of these interactions could unify at a very tiny distance scale, approximately 15 orders of magnitude smaller than a proton’s size.

This revelation was encouraging for Georgi and Glashow's unified theory; if all observed particles unified in this manner, new particles (gauge bosons) would facilitate transitions among quarks, electrons, and neutrinos. This could imply protons might decay into lighter particles, which could be observable. As Glashow noted, "Diamonds aren’t forever."

Yet, it was also established that protons must have an extraordinarily long lifespan—over a billion billion years—since otherwise, we would not have survived childhood due to radiation from decaying protons. However, with the exceedingly small proposed distance scale associated with spontaneous symmetry breaking in Grand Unification, the new gauge bosons would possess large masses, rendering their interactions short-range and weak, meaning protons could theoretically endure for an unimaginable time.

Glashow and Georgi's findings, coming on the heels of the success of electroweak theory, fueled excitement within the physics community and raised hopes for further unification. Determining whether these ideas were correct posed a challenge, however: an accelerator able to probe energies a million billion times greater than the proton's rest-mass energy could not be built. Such a machine would need a circumference comparable to that of the moon's orbit.

Fortunately, an alternative approach based on probability arguments could set limits on the proton lifetime. A lifetime is only an average: if the Grand Unified Theory predicted a proton lifetime of, say, a thousand billion billion billion years, then in a detector holding a thousand billion billion billion protons, about one would decay each year on average.

Where might one locate such an abundance of protons? The answer was straightforward: approximately 3,000 tons of water. This necessitated a dark tank devoid of radioactive backgrounds, surrounded by sensitive photodetectors ready to capture flashes of light during a proton decay event. Despite the daunting nature of this task, two substantial experiments were set up—one deep underground near Lake Erie and another in a mine in Kamioka, Japan. The depth was crucial to filter out cosmic rays that could obscure proton decay signals.
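To make the counting argument concrete, here is a minimal sketch of the arithmetic. It assumes metric tons, pure water, and that protons bound inside oxygen nuclei decay like free ones; the function names and the sample lifetime of 10^30 years are illustrative choices, not figures from the experiments themselves.

```python
# Rough count of protons in a water tank and the expected decay rate.
# Assumptions (not from the article): metric tons, pure H2O, and bound
# protons in oxygen decaying at the same rate as free ones.

AVOGADRO = 6.02214076e23        # molecules per mole
MOLAR_MASS_WATER_G = 18.015     # grams per mole of H2O
PROTONS_PER_MOLECULE = 10       # 2 from hydrogen + 8 from oxygen


def protons_in_water(mass_tonnes: float) -> float:
    """Number of protons in `mass_tonnes` metric tons of water."""
    grams = mass_tonnes * 1.0e6
    molecules = grams / MOLAR_MASS_WATER_G * AVOGADRO
    return molecules * PROTONS_PER_MOLECULE


def expected_decays_per_year(n_protons: float, lifetime_years: float) -> float:
    """Mean decays per year, valid when the lifetime far exceeds one year."""
    return n_protons / lifetime_years


if __name__ == "__main__":
    n = protons_in_water(3000.0)
    rate = expected_decays_per_year(n, 1.0e30)
    print(f"protons in tank: {n:.2e}")
    print(f"expected decays per year: {rate:.0f}")
```

Under these assumptions a 3,000-ton tank holds roughly 10^33 protons, so a lifetime of 10^30 years would correspond to on the order of a thousand decays per year, before accounting for detection efficiency.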

Both experiments began collecting data around 1982–83. The allure of Grand Unification led the physics community to believe a signal would soon emerge, capping a decade of groundbreaking discoveries in particle physics and perhaps bringing another Nobel Prize for Glashow and others.

Regrettably, nature proved less accommodating. No signals emerged in the first, second, or third years. The simplest model proposed by Glashow and Georgi was soon ruled out. Yet the fascination with Grand Unification persisted, leading to alternative unified theories in which proton decay is suppressed beyond the reach of the ongoing experiments.

On February 23, 1987, an event occurred that reinforced a principle I've observed: every time we open a new window on the universe, we are surprised. Astronomers witnessed the closest supernova explosion in nearly 400 years, located about 160,000 light-years away in the Large Magellanic Cloud, a small satellite galaxy of the Milky Way visible from the southern hemisphere.

If our understanding of supernovae is accurate, a significant portion of the energy released should manifest as neutrinos, even though the visible light produced renders supernovae the brightest cosmic events. Preliminary estimates suggested that large water detectors like IMB (Irvine-Michigan-Brookhaven) and Kamiokande should record about 20 neutrino events. Upon reviewing their data, IMB registered eight events within a ten-second window, while Kamiokande recorded eleven. In neutrino physics, this influx of data was remarkable, marking a maturation of the field. These 19 detections sparked a flurry of research, providing an unprecedented view into the core of an exploding star and serving as a laboratory for both astrophysics and neutrino physics.

Inspired by the notion that large proton-decay detectors could also function as new astrophysical neutrino detectors, several teams began constructing a new generation of such devices. The largest, known as Super-Kamiokande, was built in the Kamioka mine, featuring a 50,000-ton water tank surrounded by 11,800 phototubes, all maintained with the cleanliness of a laboratory. This purity was essential to mitigate external cosmic rays and internal radioactive contaminants that could mask the sought signals.

Simultaneously, interest surged in a related astrophysical neutrino signature. The sun generates neutrinos through the nuclear reactions in its core. Over two decades, physicist Ray Davis detected solar neutrinos but consistently found a rate of events about three times lower than predicted. To address this, a new solar neutrino detector was constructed in a deep mine in Sudbury, Canada, known as the Sudbury Neutrino Observatory (SNO).

Super-Kamiokande has been operational for over 20 years, undergoing various upgrades. No proton decay signals have been detected, nor have any new supernovae been observed. However, the precise measurements of neutrinos from this large detector, combined with findings from SNO, confirmed the solar neutrino deficit noted by Ray Davis is authentic and not attributable to astrophysical effects in the sun. This suggested at least one of the three known types of neutrinos possesses mass. As the Standard Model does not account for neutrino masses, this discovery indicated that new physics beyond the Standard Model and the Higgs must be at play.

Shortly thereafter, observations of high-energy neutrinos produced when cosmic-ray protons strike the atmosphere demonstrated that a second type of neutrino also has mass, albeit a mass far smaller than the electron's. The team leaders at SNO and Kamiokande were honored with the 2015 Nobel Prize in Physics just before I penned this draft. For now, these intriguing hints of new physics remain unexplained by existing theories.

The absence of proton decay, while disheartening, wasn't entirely unexpected. Since the proposal of Grand Unification, the physics landscape has evolved. More precise measurements of the strengths of the three non-gravitational interactions, combined with sophisticated calculations of their behavior at varying distances, revealed that if only Standard Model particles exist, the three forces will not converge at a single scale. For Grand Unification to occur, new physics must exist at energy levels beyond our current observations. The introduction of new particles could alter the energy scale where the three known interactions might unify, driving up the Grand Unification scale and consequently reducing the rate of proton decay, leading to predicted lifetimes exceeding a million billion billion billion years.

As these developments unfolded, theorists began leveraging new mathematical tools to investigate a potential new symmetry in nature, termed supersymmetry. This symmetry differs from any previously known symmetry as it connects two distinct types of particles: fermions (particles with half-integer spins) and bosons (particles with integer spins). The implication is that if this symmetry exists, for every known particle in the Standard Model, there must be a corresponding new elementary particle—each boson paired with a fermion and vice versa.

Since these new particles have not been detected, the symmetry cannot be manifest at the scales we experience. It must be broken, which would give the new particles masses large enough to have escaped detection at any accelerator built so far.

What makes this symmetry appealing is its potential link to Grand Unification. If such a theory exists at a mass scale 15 to 16 orders of magnitude higher than the proton's rest mass, that scale is also about 13 orders of magnitude greater than the scale of electroweak symmetry breaking. This raises the question of why such a vast difference in scales exists among the fundamental laws of nature. Specifically, if the Standard Model Higgs is the true remnant of the Standard Model, why is the energy scale of its symmetry breaking so much smaller than that associated with the symmetry breaking of whatever new field must exist to break the GUT symmetry?
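The gap between these scales can be checked with a few lines of arithmetic. The sketch below assumes round reference values that the text does not give: a proton rest-mass energy of 0.938 GeV, an electroweak scale of 246 GeV (the Higgs field's vacuum value), and GUT scales of 10^15 and 10^16 GeV.

```python
import math

# Assumed reference values in GeV (not given in the article).
PROTON_MASS = 0.938             # proton rest-mass energy
ELECTROWEAK_SCALE = 246.0       # Higgs field vacuum expectation value
GUT_SCALES = (1.0e15, 1.0e16)   # illustrative Grand Unification scales


def orders_of_magnitude(high: float, low: float) -> float:
    """Base-10 logarithm of the ratio high / low."""
    return math.log10(high / low)


if __name__ == "__main__":
    for gut in GUT_SCALES:
        above_proton = orders_of_magnitude(gut, PROTON_MASS)
        above_ew = orders_of_magnitude(gut, ELECTROWEAK_SCALE)
        print(f"GUT scale {gut:.0e} GeV: {above_proton:.1f} orders above "
              f"the proton, {above_ew:.1f} above the electroweak scale")
```

With these inputs the GUT scale sits 15 to 16 orders of magnitude above the proton mass and roughly 13 to 14 above the electroweak scale, in line with the figures quoted above.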

The situation is more serious than it appears. When one includes the effects of virtual particles (which appear and disappear too rapidly for direct observation), including particles of arbitrarily large mass such as the gauge particles of a presumed Grand Unified Theory, these effects tend to drive the Higgs mass and symmetry-breaking scale up toward the heavy GUT scale. This leads to what is termed the naturalness problem: it seems mathematically unnatural to have a huge disparity between the scale at which electroweak symmetry is broken by the Higgs and the scale at which GUT symmetry is broken by whatever new heavy scalar field breaks it.

In a pivotal 1981 paper, theorist Edward Witten argued that supersymmetry could mitigate the impact of high-mass virtual particles on the properties of the universe at accessible scales. Because virtual fermions and bosons of the same mass produce quantum corrections that cancel each other out, if every boson is paired with a fermion of equivalent mass, the overall quantum effects would be neutralized. The influence of high-mass virtual particles on observable properties of the universe would then vanish.

However, if supersymmetry is itself broken (which it must be, or we would have observed the supersymmetric partners of ordinary matter), the quantum corrections would not completely offset each other. Instead, they would contribute to mass corrections roughly on par with the supersymmetry-breaking scale. If this scale were similar to that of electroweak symmetry breaking, it could elucidate the observed Higgs mass.
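A toy estimate shows how much is at stake. The sketch below assumes, purely for illustration, that the quantum correction to the squared Higgs mass is of order g^2 / (16 pi^2) times the square of the heaviest scale the Higgs is sensitive to: the GUT scale if nothing cancels, or the supersymmetry-breaking scale if boson and fermion contributions cancel above it. The coupling of order one, the 10^16 GeV GUT scale, and the 1 TeV breaking scale are all assumptions, and a real calculation involves many more terms.

```python
import math

HIGGS_MASS_GEV = 125.0                       # measured Higgs mass, rounded
LOOP_FACTOR = 1.0 / (16.0 * math.pi ** 2)    # typical one-loop suppression


def correction_ratio(heavy_scale_gev: float, coupling: float = 1.0) -> float:
    """Schematic one-loop shift in m_H^2 divided by the observed m_H^2."""
    delta_m2 = coupling ** 2 * LOOP_FACTOR * heavy_scale_gev ** 2
    return delta_m2 / HIGGS_MASS_GEV ** 2


if __name__ == "__main__":
    # Hypothetical scales chosen for illustration only.
    print(f"GUT-scale sensitivity:  {correction_ratio(1.0e16):.1e}")
    print(f"1 TeV breaking scale:   {correction_ratio(1.0e3):.2f}")
```

In this crude picture, an uncancelled correction at the GUT scale exceeds the observed squared Higgs mass by some 25 orders of magnitude, while a breaking scale near 1 TeV leaves a correction comparable to the observed value.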

This also implies we should expect to observe numerous new particles—the supersymmetric counterparts of ordinary matter—at the scales currently accessible at the LHC.

This would resolve the naturalness problem by safeguarding Higgs boson masses from quantum corrections that could inflate them to the GUT energy scale. Supersymmetry could facilitate a "natural" and substantial hierarchy between the electroweak and Grand Unified scales.

The potential for supersymmetry to address the hierarchy problem greatly enhanced its appeal among physicists and prompted theorists to investigate realistic models that incorporate supersymmetry breaking and its implications. Its appeal grew further when including supersymmetric particles in calculations of how the three non-gravitational forces change with distance suggested that their strengths might converge at a single, very small distance scale, reviving the feasibility of Grand Unification.
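The convergence of the three force strengths can be illustrated with the standard one-loop formula for how each inverse coupling changes with energy. The sketch below is a simplified estimate, not the full calculation: the input couplings at the Z boson mass are rounded values assumed here, the supersymmetric coefficients are applied all the way down to that mass (as if the superpartners sat near the weak scale), and higher-order and threshold effects are ignored.

```python
import math

M_Z_GEV = 91.19  # Z boson mass, used as the starting energy scale

# Approximate inverse couplings at M_Z for U(1), SU(2), SU(3).
# Assumed rounded inputs, with the usual SU(5) hypercharge normalization.
ALPHA_INV_AT_MZ = (59.0, 29.6, 8.5)

# One-loop beta coefficients b_i for the three gauge groups.
B_STANDARD_MODEL = (41.0 / 10.0, -19.0 / 6.0, -7.0)
B_SUPERSYMMETRIC = (33.0 / 5.0, 1.0, -3.0)

PAIRS = ((0, 1), (0, 2), (1, 2))


def crossing_log_scale(i: int, j: int, b: tuple) -> float:
    """ln(mu / M_Z) at which inverse couplings i and j become equal.

    One-loop running: 1/alpha_i(mu) = 1/alpha_i(M_Z) - b_i/(2 pi) ln(mu/M_Z).
    """
    gap = ALPHA_INV_AT_MZ[i] - ALPHA_INV_AT_MZ[j]
    return 2.0 * math.pi * gap / (b[i] - b[j])


def crossing_spread(b: tuple) -> float:
    """Spread, in powers of ten, between the three pairwise crossings."""
    logs = [crossing_log_scale(i, j, b) for i, j in PAIRS]
    return (max(logs) - min(logs)) / math.log(10.0)


def mean_crossing_gev(b: tuple) -> float:
    """Geometric-mean energy of the three pairwise crossings, in GeV."""
    logs = [crossing_log_scale(i, j, b) for i, j in PAIRS]
    return M_Z_GEV * math.exp(sum(logs) / len(logs))


if __name__ == "__main__":
    for name, b in (("Standard Model", B_STANDARD_MODEL),
                    ("supersymmetric", B_SUPERSYMMETRIC)):
        print(f"{name}: crossings spread over {crossing_spread(b):.2f} "
              f"orders of magnitude, centered near {mean_crossing_gev(b):.1e} GeV")
```

With these inputs, the Standard Model crossings are scattered over about four orders of magnitude in energy, whereas the supersymmetric ones fall within a tenth of an order of magnitude of each other, near 2 x 10^16 GeV.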

Models involving supersymmetry breaking boast another enticing feature. It was suggested, even before the top quark was discovered, that if the top quark were massive, its interactions with supersymmetric partners could yield quantum corrections to the Higgs properties, allowing the Higgs field to establish a coherent background throughout space at its currently observed energy scale—if Grand Unification were to occur at a much higher, superheavy scale. In essence, the energy scale of electroweak symmetry breaking could be generated naturally within a framework where Grand Unification transpires at a considerably elevated energy scale.

For Grand Unification to succeed, however, the existence of two Higgs bosons is necessary. Furthermore, the lightest Higgs boson must not exceed a certain mass threshold, or the theory becomes untenable.

As the search for the Higgs persisted without conclusive results, accelerators edged closer to the theoretical upper limit for the lightest Higgs boson in supersymmetric theories, estimated at around 135 times the proton's mass. If the Higgs had been excluded up to that point, it would have cast doubt on the viability of supersymmetry as a solution.

However, the Higgs discovered at the LHC has a mass of approximately 125 times that of the proton, suggesting a potential path towards a grand synthesis.

As of now, the situation remains murky. The anticipated signatures of new supersymmetric partners should be striking at the LHC; many believed the chance of discovering supersymmetry was greater than that of uncovering the Higgs. Yet, the opposite has occurred. After three years of LHC operations, there remains no sign of supersymmetry. The increasing lower limits on the masses of these supersymmetric partners are becoming concerning. If they rise too high, the scale of supersymmetry breaking may drift away from the electroweak scale, diminishing the appealing aspects of supersymmetry for resolving the hierarchy problem.

Nonetheless, all is not lost. The LHC has been restarted with higher energy levels, opening the possibility of discovering supersymmetric particles soon.

Should such particles be identified, it would have significant implications. One of the major enigmas in cosmology is the nature of dark matter, which appears to dominate the mass of visible galaxies. Its abundance suggests it cannot be composed of the same particles as ordinary matter; otherwise, predictions regarding light element creation during the Big Bang would contradict observations. Thus, physicists are reasonably confident that dark matter consists of a new type of elementary particle. But what could it be?

In most models, the lightest supersymmetric partner of ordinary matter is stable and shares properties with neutrinos, being weakly interacting and electrically neutral, meaning it neither absorbs nor emits light. Additionally, my earlier calculations indicated that the residual abundance of this lightest supersymmetric particle post-Big Bang could naturally fall within the range necessary for it to constitute the dark matter dominating galactic mass.

If this holds true, our galaxy would be surrounded by a halo of dark matter particles, including those passing through your current location. Several sensitive detectors are now being developed globally to detect these dark matter particles, modeled after existing underground neutrino detectors. Thus far, no confirmations have emerged.

We find ourselves in a pivotal moment—either the best or worst of times. A race unfolds between LHC detectors and underground direct dark matter detectors to reveal the nature of dark matter first. A successful detection from either side would usher in a new era of discovery, potentially enhancing our understanding of Grand Unification. However, if no discoveries materialize in the coming years, we might rule out the possibility of a simple supersymmetric origin for dark matter, thus undermining the entire supersymmetry framework for addressing the hierarchy problem. This would necessitate a return to the drawing board, though without new signals from the LHC, we would lack guidance on which direction to pursue to develop an accurate model of nature.

The landscape has become even more intriguing as the LHC reported a tantalizing potential signal corresponding to a new particle approximately six times heavier than the Higgs. However, this particle did not exhibit characteristics consistent with any supersymmetric partner of ordinary matter. Typically, promising hints of new signals dissipate as more data is collected, and roughly six months after the initial indication, it vanished. Had it persisted, it could have revolutionized our understanding of Grand Unified Theories and electroweak symmetry, hinting at a new fundamental force and a fresh set of particles influenced by this force. Yet, nature appears to have opted for a different course.

The absence of concrete experimental guidance or validation of supersymmetry has not deterred one faction of theoretical physicists. The elegant mathematical aspects of supersymmetry led to a revival of string theory, dormant since the 1960s when Yoichiro Nambu and others endeavored to understand the strong force as a theory of quarks linked by string-like excitations. By incorporating supersymmetry into quantum string theory, termed superstring theory, remarkable mathematical outcomes emerged, suggesting the potential to unify not just the three non-gravitational forces but all four known forces into a cohesive quantum field theory.

However, this theory necessitates the existence of additional spacetime dimensions, none of which have yet been observed. Moreover, it does not yield any predictions that can currently be tested by conceivable experiments. Recently, the theory has become significantly more complex, suggesting that strings may not even represent the central dynamical variables within the theory.

Despite these challenges, a dedicated group of talented physicists has persisted in their work on superstring theory, now known as M-theory, throughout the past three decades since its peak in the mid-1980s. Periodic claims of success arise, yet M-theory still lacks the critical component that renders the Standard Model a triumph: the ability to connect with measurable phenomena, resolve otherwise perplexing puzzles, and offer fundamental explanations for the nature of our reality. This does not imply M-theory is incorrect, but it currently remains largely speculative, albeit with sincere and well-motivated intentions.

It is crucial to remember that history suggests most cutting-edge physical theories are ultimately flawed. If they weren't, theoretical physics would be straightforward. The journey to the Standard Model required centuries of trial and error, dating back to ancient Greek science.

So here we stand. Are groundbreaking experimental insights imminent that might affirm or refute the ambitious hypotheses of theoretical physicists? Or are we on the brink of a barren phase where nature offers no clues on how to delve deeper into the cosmos's underlying structure? Time will tell, and we must adapt to the new reality, regardless of the outcome.

Lawrence M. Krauss is a theoretical physicist and cosmologist, serving as the director of the Origins Project and a foundation professor at the School of Earth and Space Exploration at Arizona State University. He has authored bestselling books, including *A Universe from Nothing* and *The Physics of Star Trek*.

From The Greatest Story Ever Told — So Far: Why Are We Here? © 2017, Lawrence M. Krauss, published by Atria Books, a Division of Simon & Schuster, Inc. Printed by permission.

This article was originally published on Nautilus on March 16, 2017.