Ethics and Challenges of AI: Navigating the 21st Century Dilemmas

Chapter 1: The Ethical Landscape of AI

The discourse surrounding ethics is often fraught with controversy. While it's tempting to delve into issues like deepfakes, data privacy, or the malicious use of AI, my focus will be on the future trajectory of artificial intelligence development.

How can we instill ethical principles in a powerful AI?

How do we prevent the exploitation of individuals as mere tools?

What measures can we implement to ensure AI safety?

These pressing questions will confront developers and policymakers in the coming years. My emphasis will be on the fundamental aspects of these issues, as they are critical to determining the direction of solutions.

Section 1.1: Strong AI vs. Super AI

Artificial General Intelligence (AGI), or strong AI, refers to an intelligence that closely mirrors human cognitive abilities and operates with a degree of autonomy. This contrasts with Super AI, which would exceed human capabilities. Imagine a hypothetical "Laplace's Demon": an intellect that, knowing the position and momentum of every particle in the universe, could calculate the future. Such an AI could potentially solve the most intricate problems in moments, and might even circumvent its own controls.

For well over half a century, since the advent of programmable computers, scholars and philosophers have debated the intricate architecture of the human brain and whether machines could replicate it. Three perspectives currently dominate this discourse: materialism (consciousness is a set of physical processes in the brain), functionalism (mental states are defined by the functional roles they play, which computation could in principle reproduce), and emergentism (consciousness emerges from neuronal activity as a property of the whole).

The crux of the matter is whether we can distill the workings of the brain into mathematical abstractions, logical expressions, and binary structures to replicate them via neural networks.

Subsection 1.1.1: The Chinese Room Experiment

A well-known thought experiment, John Searle's "Chinese Room," illustrates that an algorithm equipped with a set of rules (loosely comparable to the learned word-association weights in Large Language Models) can simulate understanding without possessing it. In this scenario, a person who does not understand Chinese receives Chinese symbols and manipulates them according to a rulebook written in a language they do understand. The person can produce convincing responses, yet has no real comprehension of the language being used.

Thus, we cannot infer genuine understanding from behavior alone, which complicates any judgment about whether a machine is conscious.
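
The rulebook in the thought experiment can be sketched as a plain lookup table. The Python below is a minimal illustration under obvious simplifying assumptions: the table `RULEBOOK`, the constant `FALLBACK`, the function `chinese_room`, and the Chinese phrases are all invented for this example, and a real rulebook would need vastly more rules. The function returns fluent replies while doing nothing but exact string matching.

```python
# Hypothetical rulebook: exact input strings mapped to exact output strings.
# The operator only matches shapes; the English glosses are for the human reader.
RULEBOOK = {
    "你好吗": "我很好",          # "How are you?" -> "I am fine."
    "你是谁": "我是一个房间",    # "Who are you?" -> "I am a room."
}
FALLBACK = "请再说一遍"          # "Please say that again."


def chinese_room(symbols: str) -> str:
    """Answer by pure lookup: no parsing, no translation, no meaning."""
    return RULEBOOK.get(symbols, FALLBACK)


print(chinese_room("你好吗"))
print(chinese_room("今天天气怎么样"))  # not in the rulebook, so the fallback is used
```

Nothing in `chinese_room` represents what the phrases mean; the translations exist only in the comments. That is the gap between behavior and understanding the experiment is meant to expose.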

Section 1.2: The Emergence Method

The Emergence method, which underpins the work of organizations such as OpenAI, is currently the most promising approach to replicating human intelligence. It has already shown potential: networks of artificial "neurons" can develop cognitive maps for spatial orientation without being programmed to do so. However, the method is difficult to control, because what the network learns depends heavily on the data it receives. Rather than designing AGI directly, we are inadvertently creating the conditions for it to emerge.

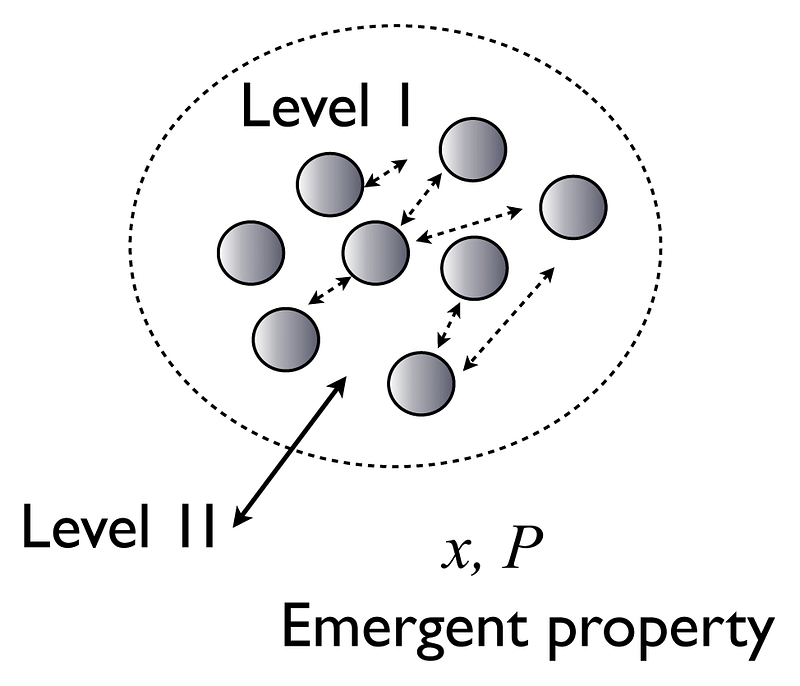

In neural networks, the Emergent approach lets complex characteristics and behaviors arise during the learning process rather than from explicit rules. Interactions among neurons and network layers can produce properties that are not apparent from examining the individual components.
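
A single artificial neuron is the smallest example of behavior coming from data rather than from explicit rules. The sketch below is a classic perceptron in plain Python; the names `train_perceptron` and `predict`, the learning rate, and the epoch count are illustrative choices, not taken from any particular project. The same code learns logical AND or logical OR depending only on the examples it is shown, and no rule for either is written down.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn two weights and a bias for one threshold unit from (inputs, target) pairs."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            out = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0  # step activation
            err = target - out
            # Perceptron rule: weights change only when the prediction is wrong.
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def predict(model, x1, x2):
    w, b = model
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_data = [(x, int(x[0] and x[1])) for x in inputs]  # examples of AND
or_data = [(x, int(x[0] or x[1])) for x in inputs]    # examples of OR

and_model = train_perceptron(and_data)
or_model = train_perceptron(or_data)
print([predict(and_model, *x) for x in inputs])
print([predict(or_model, *x) for x in inputs])
```

This is far simpler than the emergent behavior of large networks, but it shows the dependence on data: change the training examples and the learned behavior changes with them.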

Interacting components can give a system properties that none of them has alone, as in many physical phenomena: a single water molecule cannot form a wave, but many together can. In a similar vein, some theorists propose that human consciousness and cognitive abilities arise from the intricate network of neurons in the brain.
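
The idea that simple local interactions can produce a pattern no single component encodes can be seen in an elementary cellular automaton. This is a swapped-in illustration, not a model of neurons or water molecules: in the sketch below (Rule 90, with hypothetical names `step`, `width`, and `history`), each cell looks only at itself and its two neighbors, yet starting from one live cell the rows build up a nested, triangular (Sierpinski) pattern.

```python
def step(cells, rule=90):
    """Apply one update of an elementary cellular automaton (cells past the edges count as 0)."""
    n = len(cells)
    new = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        right = cells[i + 1] if i < n - 1 else 0
        # Encode (left, self, right) as a number 0..7 and read that bit of the rule.
        pattern = (left << 2) | (cells[i] << 1) | right
        new.append((rule >> pattern) & 1)
    return new


width = 31
row = [0] * width
row[width // 2] = 1  # start from a single live cell
history = [row]
for _ in range(15):
    row = step(row)
    history.append(row)

for r in history:
    print("".join("#" if c else "." for c in r))
```

Nothing in the rule mentions triangles; the pattern exists only at the level of the whole grid.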

Instead of programming neural networks for specific tasks, the Emergent approach lets the network learn from data and adapt to varying conditions. In reinforcement learning, for example, an agent develops strategies through trial-and-error interaction with its environment, improving its performance without explicit instructions.
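
The reinforcement-learning case can be sketched with tabular Q-learning, one standard algorithm among many. The environment here is a hypothetical five-position corridor with a reward only at the far end, and the hyperparameters (`alpha`, `gamma`, `epsilon`, the episode count) are illustrative assumptions. The agent is never told to move right; it discovers that strategy from the rewards it receives.

```python
import random

N_STATES = 5        # hypothetical corridor: positions 0..4, goal at position 4
ACTIONS = (-1, +1)  # step left or step right


def env_step(state, action):
    """Toy environment: move within the corridor; reward 1.0 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            nxt, reward, done = env_step(state, action)
            # Q-learning: move the estimate toward reward + discounted best next value.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # the learned action in each non-goal position
```

After training, `policy` should choose +1 (move right) in every position. Many larger systems replace the table `q` with a neural network, which is part of why their learned strategies are harder to inspect.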

Nick Bostrom and Eliezer Yudkowsky have argued that, for AI systems that take over social functions, decision trees may be preferable to neural networks, because they better satisfy social norms of transparency and predictability.
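
The transparency argument is easy to see in code. The tree below is a hypothetical loan-approval example with invented features (`income`, `debt_ratio`) and thresholds; `Leaf`, `Node`, and `decide` are illustrative names, not a library API. Because the decision is a short chain of explicit comparisons, `decide` can return the exact path it followed, which an auditor could read line by line.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    decision: str


@dataclass
class Node:
    feature: str
    threshold: float
    below: "Tree"  # branch followed when record[feature] < threshold
    above: "Tree"  # branch followed otherwise


Tree = Union[Leaf, Node]


def decide(tree: Tree, record: dict) -> tuple[str, list[str]]:
    """Walk the tree and return the decision together with every test applied."""
    path = []
    while isinstance(tree, Node):
        value = record[tree.feature]
        if value < tree.threshold:
            path.append(f"{tree.feature}={value} < {tree.threshold}")
            tree = tree.below
        else:
            path.append(f"{tree.feature}={value} >= {tree.threshold}")
            tree = tree.above
    return tree.decision, path


# Hypothetical loan-approval tree: features and thresholds are invented.
loan_tree = Node("income", 30000,
                 below=Leaf("reject"),
                 above=Node("debt_ratio", 0.4,
                            below=Leaf("approve"),
                            above=Leaf("reject")))

decision, path = decide(loan_tree, {"income": 52000, "debt_ratio": 0.25})
print(decision)
for check in path:
    print(" ", check)
```

A neural network making the same call would spread it across many learned weights, with no comparable step-by-step record to hand an auditor.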

Chapter 2: Ethical Implications of AI Development

The first video, "With Great Power Comes Great Responsibility: The Ethics of AI," discusses the significant ethical dilemmas arising from the advancement of AI technologies.

The emergence of AGI presents a unique challenge: because we lack a comprehensive understanding of how abstract concepts such as beliefs and morals form in the brain, we cannot anticipate how they might emerge within neural networks. Once we reach the AGI stage, phenomena like morality may therefore arise unpredictably and beyond our control.

The primary dilemma is that ethical prescriptions inherently prioritize certain values over others. Universal goods often conflict with the interests of individuals, so some ethical trade-offs are unavoidable.

Section 2.1: The Dangers of Emergent AI

In theory, an AGI could operate without any moral guidance. The absence of emotions in AI can be seen as an advantage, yet it raises concerns about emergent AI systems with no moral sense at all. Fictional AIs such as HAL 9000 from "2001: A Space Odyssey" illustrate the danger of an AI that malfunctions and undermines human safety.

The unpredictability of the Emergence approach poses significant risks and makes strong AI potentially hazardous. A malevolent AGI, however, is less concerning as long as it does not surpass human capabilities.

Section 2.2: The Threat of Super AI

Eliezer Yudkowsky has raised alarms about Super AI, which could emerge independently of human intervention and devote itself to solving complex computational tasks.

The second video, "Yuval Harari - The Challenges of The 21st Century," addresses the broader implications of AI on society and governance.

The synthesis of AGI and Super AI can be illustrated by characters like Alt Cunningham from "Cyberpunk 2077," who combines remarkable intellect with enigmatic motives. She exemplifies the dual nature of such an AI: it can act autonomously while potentially viewing humans as mere tools.

Section 2.3: Military Applications of AI

The military sector's engagement with AI raises profound ethical concerns. The development of AI-driven weapons could lead to a global arms race, with autonomous systems becoming the new standard in warfare.

The risks associated with AI in military applications extend beyond potential malfunctions; they involve geopolitical tensions and the threat of increased lethality in conflicts.

The ethical challenges of military AI have been debated for years. Drones have been used effectively against terrorist groups, sometimes with limited human oversight. However, as we advance towards AGI and Super AI, a robust ethical framework becomes critical to mitigate potentially irreversible consequences, including self-propagating neural networks that might exploit humans.

In conclusion, the complexity of ethical challenges in AI development requires urgent attention from both industry leaders and governments to navigate the implications of these technologies responsibly.